Google’s recently released Pixel 8 has a stunning new feature built into its camera application: face fixing. Everyone has taken a group photo in which just one person is looking away or has their eyes closed. With the help of machine learning, that may not be a problem for much longer.

How It Works

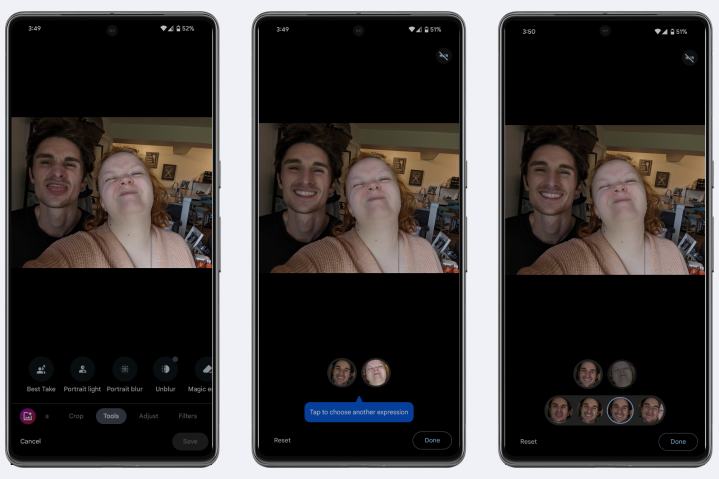

The feature is called Best Take. It looks at the pictures you take in quick succession within a short time interval and offers choices for how combinations of them could look. Much like an automated, higher-level version of a Photoshop composite, it merges parts of different pictures so the final image looks the way the user wants. This does mean, however, that for every group member to be looking at the camera in the end product, each person needs to be looking at the camera in at least one of the photos taken during that time interval.
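To make the selection logic concrete, here is a minimal Python sketch of the idea described above. It is not Google's implementation, which has not been published in this form. The `FaceShot` record, the `plan_composite` function, and the "eyes open and facing the camera" test are all hypothetical names and assumptions made for illustration, and the hard parts (detecting faces and blending pixels) are left out entirely.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FaceShot:
    """One person's face as seen in one frame of the burst (hypothetical record)."""
    frame: int
    person: str
    eyes_open: bool
    facing_camera: bool


def is_good(shot: FaceShot) -> bool:
    # Assumed quality rule: a face is usable if the eyes are open
    # and the person is looking at the camera.
    return shot.eyes_open and shot.facing_camera


def plan_composite(shots, base_frame):
    """Pick, for each person, the frame their face should be taken from.

    Returns (plan, unfixable): plan maps person -> source frame, and
    unfixable lists people with no good face in any frame.
    """
    by_person = {}
    for shot in shots:
        by_person.setdefault(shot.person, []).append(shot)

    plan, unfixable = {}, []
    for person, candidates in by_person.items():
        good_frames = [s.frame for s in candidates if is_good(s)]
        if not good_frames:
            # No usable face anywhere in the burst: keep the base frame as-is.
            plan[person] = base_frame
            unfixable.append(person)
        elif base_frame in good_frames:
            plan[person] = base_frame
        else:
            # Borrow from the good frame closest in time to the base frame.
            plan[person] = min(good_frames, key=lambda f: abs(f - base_frame))
    return plan, sorted(unfixable)


# Three frames, three people; frame 1 is the photo the user wants to fix.
burst = [
    FaceShot(0, "Ana", True, True),  FaceShot(1, "Ana", True, True),  FaceShot(2, "Ana", True, True),
    FaceShot(0, "Ben", True, True),  FaceShot(1, "Ben", False, True), FaceShot(2, "Ben", True, False),
    FaceShot(0, "Cy", False, True),  FaceShot(1, "Cy", True, False),  FaceShot(2, "Cy", False, False),
]
plan, unfixable = plan_composite(burst, base_frame=1)
```

In this made-up burst, Ana keeps her face from the base frame, Ben's face is borrowed from frame 0, and Cy cannot be fixed because he never has a usable face in any frame. That last case is the limitation noted above: the feature can only reuse faces that were actually captured.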

“People don’t want to capture reality. They want to capture beautiful images. The whole image processing pipeline in smartphones is meant to produce good-looking images–not real ones… The fact that we see sharp colorful images is because our brain can reconstruct information and infer even missing information… So, you may complain cameras do ‘fake stuff’, but the human brain actually does the same thing in a different way.”

University of Cambridge Professor Rafal Mantiuk

Possible Concerns

As with most developments in contemporary AI technology, ethical questions arise. By piecing together an image from several moments, the feature captures a “moment” that never existed. Since their invention, photographs have been used to chronicle time, events, and memories. This feature could change what it means to relive a memory through a photo.

Another question is who gets to make these edits. Who decides that someone’s face in an image is not what it should be? For now, the replacement faces can only be picked from photos taken in that same time interval, but as with any feature that can be extended, it is only a matter of time until another company or individual creates a more comprehensive version. At that point, can anyone completely change what a person was expressing at that moment? Are the gestures and emotions of an occasion open to reinterpretation by anyone who wants to edit it?

Takeaway

Going beyond existing camera features like face filters and enhancements, this addition is an exciting new development for AI enthusiasts and general Pixel users alike. Even with Photoshop and other image-correcting software already available, the definition of a photograph may soon change as more sophisticated features come out.